INTRODUCTION

Hyperconvergence has dramatically transformed the data center landscape over the past few years. New technologies are being developed, good old ones are being improved… We live in exciting times! And as data centers become more reliable and powerful, it is important to get more out of the hardware in use: nobody likes to leave money on the table! Intel, Mellanox and StarWind have teamed up to build a highly available Hyper-V cluster that delivers awesome performance without compromising the manageability of the environment. This article briefly discusses the measurements and showcases the recent results.

WHO’S IN?

Intel has been dominating the IT landscape since the mid-’70s and has no intention of stopping anytime soon. Its solutions are the lifeblood of virtually any IT and technological innovation. Intel Platinum CPUs are designed to make cloud computing happen and to handle sophisticated real-time analytics and processing for mission-critical applications. Intel Optane NVMe flash is nothing less than the world’s fastest storage to date.

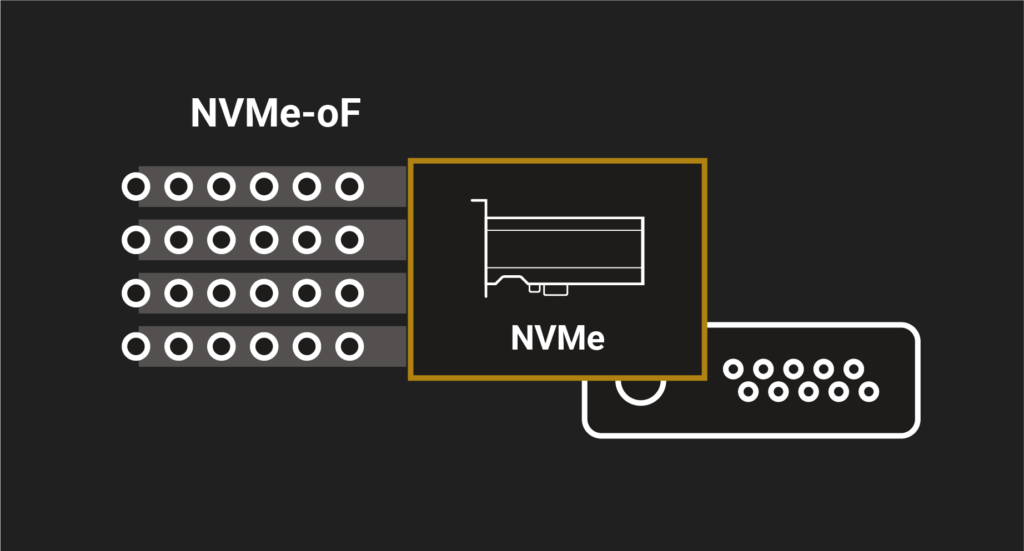

Mellanox is the best when it comes to network performance. They pioneered Remote Direct Memory Access (RDMA) and NVMe over Fabrics (NVMe-oF), which are game changers for hyperconvergence. Their networking gear provides exceptionally high data transfer rates at the lowest possible latency, enabling today’s enterprises to meet the demands of the data explosion. In a nutshell: things that work for Azure work for everybody. In this study, Mellanox was responsible for networking, i.e., server interconnections, Network Interface Cards (NICs), and switches.

StarWind creates a wide range of solutions that grant unprecedented uptime and performance for mission-critical applications. The company designs both hardware and software solutions that are well known for their reliability and interoperability with off-the-shelf hardware and software. StarWind enables users to avoid the limitations of traditional hyper-converged infrastructure that drive up costs or cause hardware underutilization. In this study, StarWind was responsible for the shared storage capable of delivering most of the raw IOPS within a reasonable latency (10 µs or less compared to the local NVMe device); basically no other vendor can do that.

Supermicro servers are the hardware world’s equivalent of Linux. The company builds the latest innovations into its products and works at the avant-garde of IT. Supermicro did a lot to make this project happen: they provided the newest motherboards and SuperServer units.

HYPERVISOR CHOICE

StarWind’s hypervisor choice was Microsoft Hyper-V, and they have an explanation (or two). First, Microsoft Hyper-V is one of the most common and robust hypervisors these days; most of the virtualized environments in the world are built on Hyper-V. Second, StarWind has always been more of a Windows shop; it has been designing solutions that expand Hyper-V capabilities for years.

Good enough, but there must be another reason, because we all know of hypervisors that work better for high-performance computing than Hyper-V. KVM is faster and more efficient; it powers the environments where all the innovation happens! VMware vSphere is the de facto standard of enterprise-grade virtualization. It feels like Hyper-V is left somewhere in between. So why Hyper-V over ESXi and KVM? KVM already has the whole NVMe over Fabrics (NVMe-oF) stack, including both target and initiator; there is basically nothing to improve there except maybe management. The next candidate was VMware vSphere, which had neither an NVMe-oF target nor an initiator when the study was held, but VMware refused to cooperate with StarWind on this project. Given all this, Hyper-V became the only option. Windows Server itself has some flaws, so StarWind, being the software provider in this study, had to come up with a way to improve it.

HOW STARWIND MAKES WINDOWS SERVER BETTER

StarWind has built the test environment on Windows Server 2019 and StarWind Virtual SAN (VSAN). StarWind VSAN is the only software-defined storage (SDS) solution for Windows to date that can use PCIe flash effectively; it enables applications running on the shared NVMe storage to get up to 90% of raw IOPS. This became possible thanks to StarWind NVMe-oF Initiator – the industry’s first all-software NVMe-oF initiator for Windows. The solution was said to work only with StarWind VSAN when the study was carried out; support of other SDS solutions may be introduced later. Mellanox ConnectX NICs starting with ConnectX-3 support NVMe-oF too, but StarWind NVMe-oF Initiator is a bigger thing as it is an all-software solution for Windows. First of its kind! Here is my study revealing how efficient StarWind NVMe-oF Initiator may be.

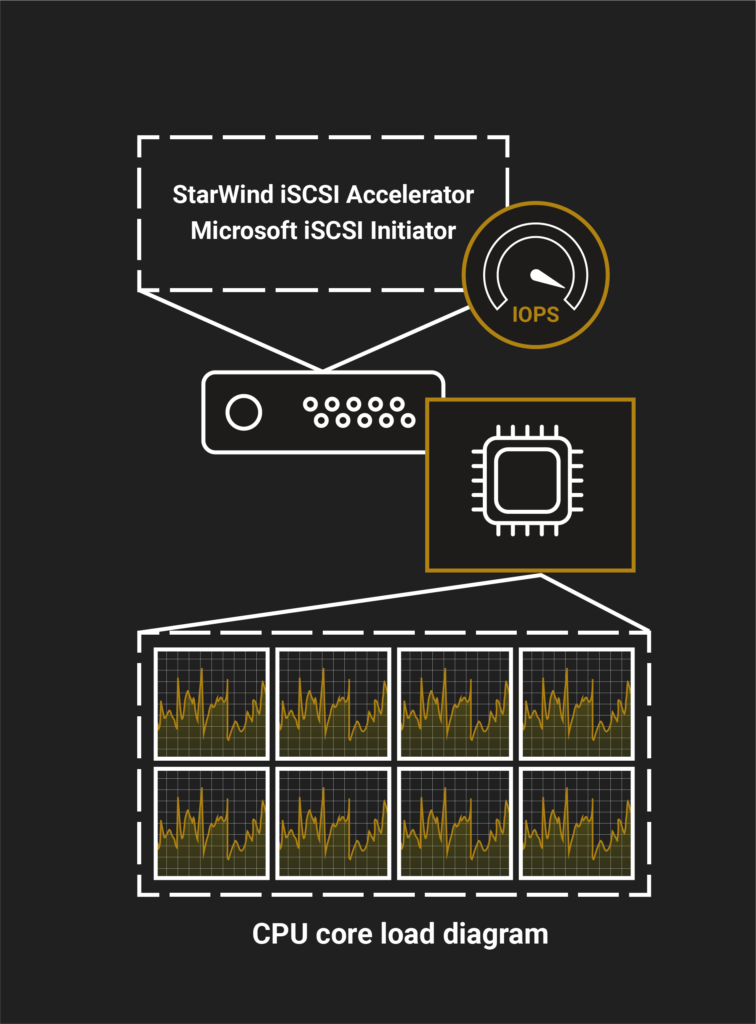

StarWind also developed StarWind iSCSI Accelerator, a driver that allows for better workload distribution in Hyper-V environments. With the Accelerator in place, all CPU cores are utilized effectively; no cores are overwhelmed while others idle. This solution was used in the study to expand Microsoft iSCSI Initiator capabilities every time the storage was presented over iSCSI. I am going to give this solution a shot one day.

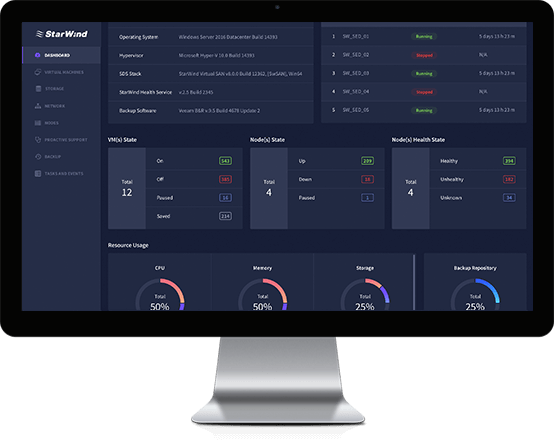

On top of all that, as an answer to Windows Admin Center, StarWind designed StarWind Command Center, a web-based graphical user interface (GUI) that provides all the necessary information on system health and performance in comprehensive, customizable dashboards.

SETTING HCI INDUSTRY IOPS RECORD

Stage 1: Cache-less all-NVMe iSCSI shared storage

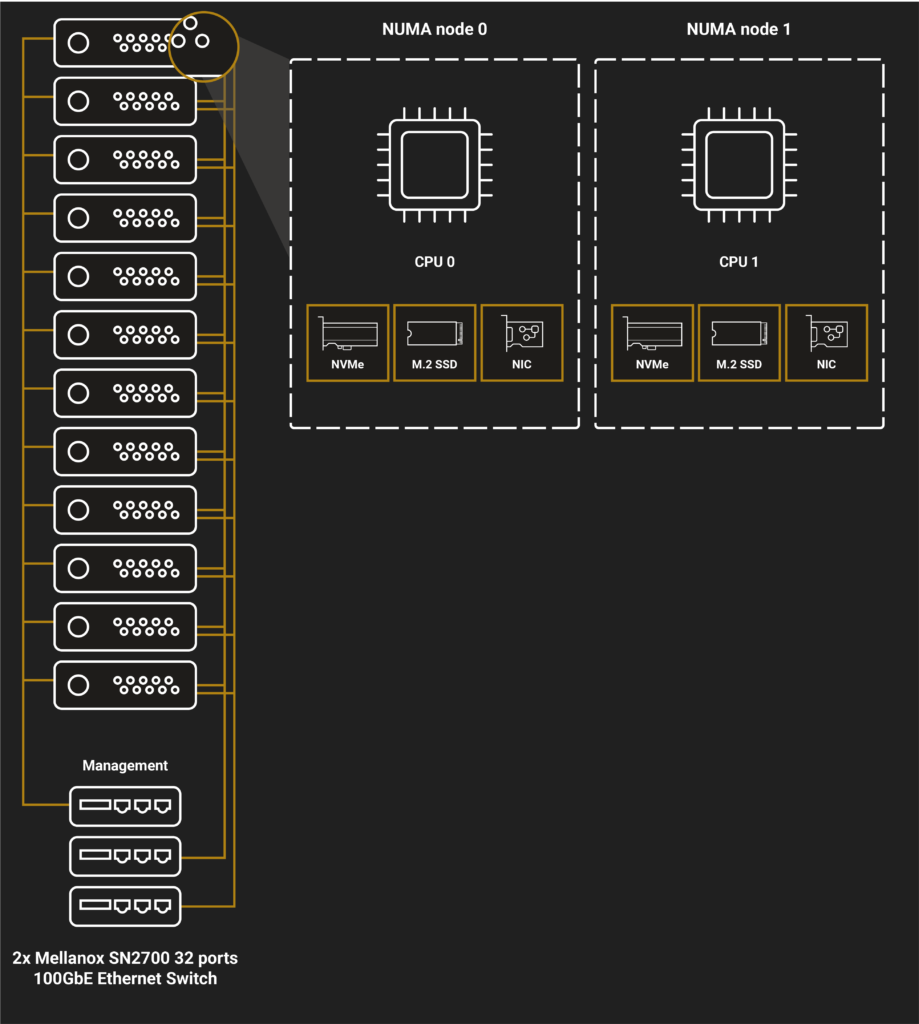

To start with, here is what the configuration of each server was like for this study:

- Platform: Supermicro SuperServer 2029UZ-TR4+

- CPU: 2x Intel® Xeon® Platinum 8268 Processor 2.90 GHz. Intel® Turbo Boost ON, Intel® Hyper-Threading ON

- RAM: 96GB

- Boot storage: 2x Intel® SSD D3-S4510 Series (240GB, M.2 80mm SATA 6Gb/s, 3D2, TLC)

- Storage capacity: 2x Intel® Optane™ SSD DC P4800X Series (375GB, 1/2 Height PCIe x4, 3D XPoint™). The latest available firmware was installed.

- RAW capacity: 9TB

- Usable capacity: 8.38TB

- Working set capacity: 4.08TB

- Networking: 2x Mellanox ConnectX-5 MCX516A-CCAT 100GbE Dual-Port NIC

- Switch: 2x Mellanox SN2700 Spectrum 32 ports 100GbE Ethernet Switch
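The capacity figures in this list also hint at the cluster size, which the text does not state outright. The sketch below is a back-of-the-envelope check, not the authors' method. It assumes decimal units (1 TB = 1000 GB) and a per-drive ceiling of 550,000 4 kB random-read IOPS, which is the vendor-rated figure for the P4800X as far as I know and is not a number given in this article. All names are illustrative.

```python
# Back-of-the-envelope check of the Stage 1 sizing figures.
# Assumptions (not stated in the article): decimal units, and a vendor-rated
# ceiling of 550,000 4 kB random-read IOPS per Optane P4800X drive.

DRIVE_CAPACITY_GB = 375            # per-drive capacity from the configuration list
DRIVES_PER_SERVER = 2              # Stage 1: 2x Optane P4800X per server
RAW_CAPACITY_GB = 9_000            # "RAW capacity: 9TB"
ASSUMED_IOPS_PER_DRIVE = 550_000   # assumption: vendor-rated 4 kB random read


def implied_drive_count(raw_capacity_gb: int, drive_capacity_gb: int) -> int:
    """Number of drives needed to reach the stated raw capacity."""
    drives, remainder = divmod(raw_capacity_gb, drive_capacity_gb)
    if remainder:
        raise ValueError("raw capacity is not a whole number of drives")
    return drives


drives = implied_drive_count(RAW_CAPACITY_GB, DRIVE_CAPACITY_GB)
servers = drives // DRIVES_PER_SERVER
theoretical_iops = drives * ASSUMED_IOPS_PER_DRIVE

print(f"drives: {drives}, servers: {servers}, theoretical IOPS: {theoretical_iops:,}")
```

Under these assumptions the numbers work out to 24 drives across 12 servers and a ceiling of 13,200,000 IOPS, which is the theoretical figure the Stage 1 results are compared against.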

The main purpose of this test was to demonstrate how “fast” cache-less iSCSI shared storage can be.

StarWind considers iSCSI to be an inappropriate protocol for presenting flash over the network: latency is very high and hardware utilization is poor. To check these claims, caching was disabled so that it would not skew the measurements. Performance was tested with different workloads.

Find the setup diagram below.

NOTE: On every server, each NUMA node had 1x Intel® SSD D3-S4510, 1x Intel® Optane™ SSD DC P4800X Series, and 1x Mellanox ConnectX-5 100GbE Dual-Port NIC. This configuration made it possible to maximize hardware utilization efficiency.

Here are the results.

| Run | Parameters | Result |
|---|---|---|
| Maximize IOPS, all-read | 4 kB random, 100% read | 6,709,997 IOPS¹ |
| Maximize IOPS, read/write | 4 kB random, 90% read, 10% write | 5,139,741 IOPS |
| Maximize IOPS, read/write | 4 kB random, 70% read, 30% write | 3,434,870 IOPS |
| Maximize throughput | 2 MB sequential, 100% read | 61.9GBps² |
| Maximize throughput | 2 MB sequential, 100% write | 50.81GBps |

¹ 51% performance out of theoretical 13,200,000 IOPS

² 53% bandwidth out of theoretical 112.5GBps

StarWind VSAN was not fully loaded and had headroom to deliver more performance. That’s why 2 more Intel Optane cards were added.

| Run | Parameters | Result |
|---|---|---|
| Maximize IOPS, all-read | 4 kB random, 100% read | 6,709,997 IOPS¹ |
| Maximize IOPS, read/write | 4 kB random, 90% read, 10% write | 5,139,741 IOPS |
| Maximize IOPS, read/write | 4 kB random, 70% read, 30% write | 3,434,870 IOPS |
| Maximize throughput | 2 MB sequential, 100% read | 61.9GBps |
| Maximize throughput | 2 MB sequential, 100% write | 50.81GBps |
| Maximize throughput +2NVMe SSD | 2 MB sequential, 100% read | 108.38GBps² |
| Maximize throughput +2NVMe SSD | 2 MB sequential, 100% write | 100.29GBps |

¹ 51% performance out of theoretical 13,200,000 IOPS

² 96% bandwidth out of theoretical 112.5GBps
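The percentages in the footnotes are simply the measured result divided by the theoretical ceiling. A minimal sketch of that arithmetic follows; the measured and theoretical values are copied from the Stage 1 tables, while the function and variable names are illustrative.

```python
# Share of the theoretical ceiling reached by a measured result.
# Values are taken from the Stage 1 tables; names are illustrative.

THEORETICAL_IOPS = 13_200_000   # theoretical ceiling quoted in footnote 1
THEORETICAL_GBPS = 112.5        # theoretical ceiling quoted in footnote 2


def efficiency_pct(measured: float, theoretical: float) -> float:
    """Return measured/theoretical as a percentage."""
    if theoretical <= 0:
        raise ValueError("theoretical ceiling must be positive")
    return 100.0 * measured / theoretical


all_read_iops = efficiency_pct(6_709_997, THEORETICAL_IOPS)
read_gbps_extra_drives = efficiency_pct(108.38, THEORETICAL_GBPS)

print(f"4 kB random read: {all_read_iops:.0f}% of theoretical IOPS")
print(f"2 MB sequential read (+2 NVMe SSD): {read_gbps_extra_drives:.0f}% of theoretical bandwidth")
```

The same helper can be applied to the later stages, where results above 100% mean the measured figure exceeded the quoted ceiling.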

Stage 2: SATA SSD iSCSI shared storage + write-back cache

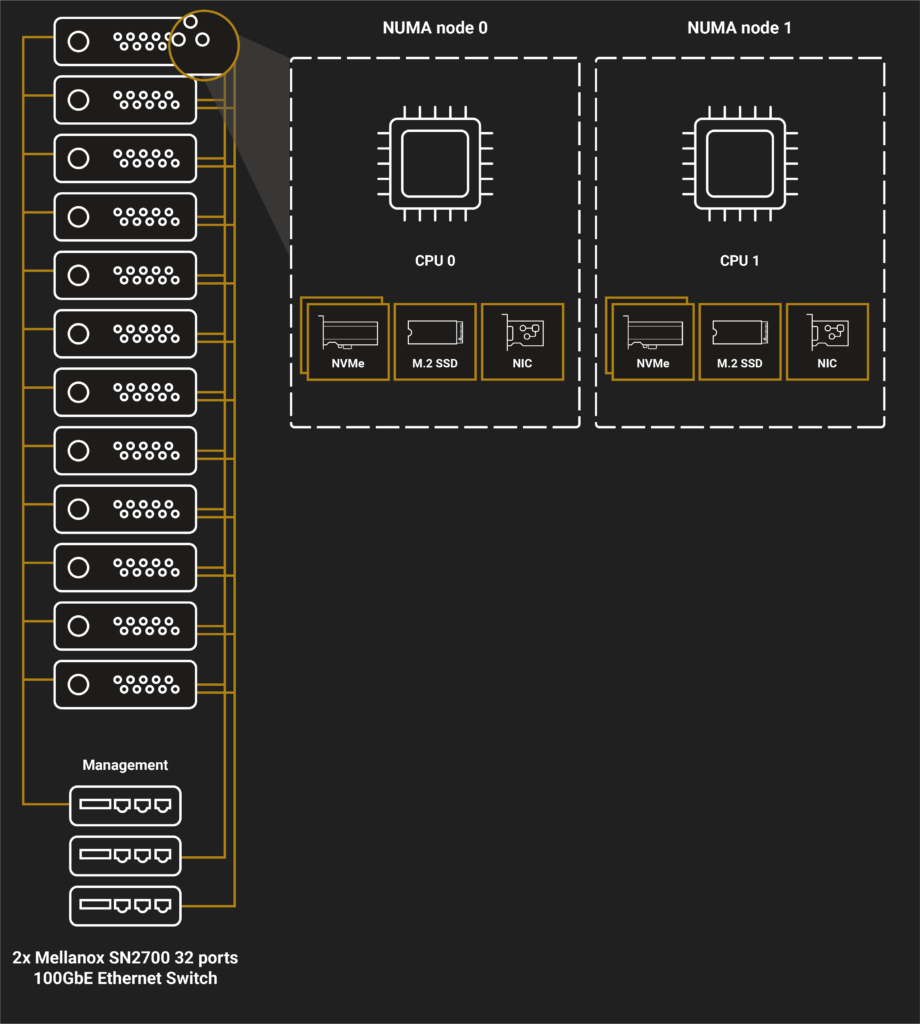

Server configuration:

- Platform: Supermicro SuperServer 2029UZ-TR4+

- CPU: 2x Intel® Xeon® Platinum 8268 Processor 2.90 GHz. Intel® Turbo Boost ON, Intel® Hyper-Threading ON

- RAM: 96GB

- Boot and Storage Capacity: 2x Intel® SSD D3-S4510 Series (240GB, M.2 80mm SATA 6Gb/s, 3D2, TLC)

- Write-Back Cache Capacity: 4x Intel® Optane™ SSD DC P4800X Series (375GB, 1/2 Height PCIe x4, 3D XPoint™). The latest available firmware was installed.

- RAW capacity: 5.7TB

- Usable capacity: 5.4TB

- Working set capacity: 1.32TB

- Networking: 2x Mellanox ConnectX-5 MCX516A-CCAT 100GbE Dual-Port NIC

- Switch: 2x Mellanox SN2700 Spectrum 32 ports 100 GbE Ethernet Switch

This test demonstrates the maximum performance of an all-flash array where Intel Optane drives are used for write-back caching. The cluster was configured to run the workload from M.2 SATA flash drives, chasing both IOPS and bandwidth. At this stage, the all-NVMe storage array was also presented over iSCSI.

The setup scheme is provided below.

NOTE: On every server, each NUMA node featured 1x Intel® SSD D3-S4510, 2x Intel® Optane™ SSD DC P4800X Series, and 1x Mellanox ConnectX-5 100GbE Dual-Port NIC. This configuration made it possible to maximize hardware utilization efficiency.

Here are the results.

| Run | Parameters | Result |
|---|---|---|
| Maximize IOPS, all-read | 4 kB random, 100% read | 26,834,060 IOPS¹ |
| Maximize IOPS, read/write | 4 kB random, 90% read, 10% write | 25,840,684 IOPS |
| Maximize IOPS, read/write | 4 kB random, 70% read, 30% write | 16,034,494 IOPS |
| Maximize throughput | 2 MB sequential, 100% read | 116.39GBps² |
| Maximize throughput | 2 MB sequential, 100% write | 101.8GBps |

¹ 101.5% performance out of theoretical 26,400,000 IOPS

² 103.5% performance out of theoretical 112.5GBps

Stage 3: All-NVMe shared storage presented over Linux SPDK NVMe-oF target + StarWind NVMe-oF Initiator

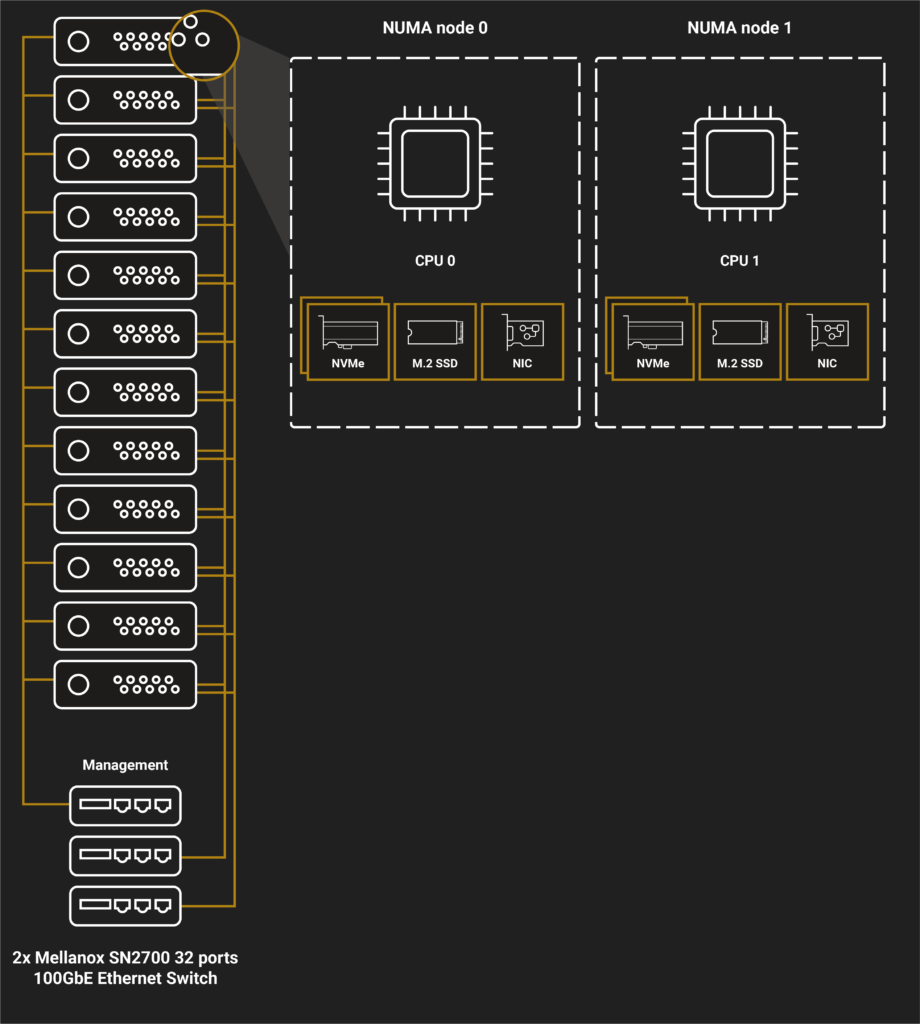

Here is the setup configuration:

- Platform: Supermicro SuperServer 2029UZ-TR4+

- CPU: 2x Intel® Xeon® Platinum 8268 Processor 2.90 GHz. Intel® Turbo Boost ON, Intel® Hyper-Threading ON

- RAM: 96GB

- Boot storage: 2x Intel® SSD D3-S4510 Series (240GB, M.2 80mm SATA 6Gb/s, 3D2, TLC)

- Storage capacity: 4x Intel® Optane™ SSD DC P4800X Series (375GB, 1/2 Height PCIe x4, 3D XPoint™). The latest available firmware was installed.

- RAW capacity: 18TB

- Usable capacity: 16.8TB

- Working set capacity: 16.8TB

- Networking: 2x Mellanox ConnectX-5 MCX516A-CCAT 100GbE Dual-Port NIC

- Switch: 2x Mellanox SN2700 Spectrum 32 ports 100GbE Ethernet Switch

This test demonstrates how efficiently an all-flash array can be presented over NVMe-oF. StarWind showcased its implementation of NVMe-oF: Linux SPDK NVMe-oF target and StarWind NVMe-oF Initiator. Caching was disabled to make performance measurements accurate.

Here is how servers were connected.

NOTE: On every server, each NUMA node featured 1x Intel® SSD D3-S4510, 2x Intel® Optane™ SSD DC P4800X Series, and 1x Mellanox ConnectX-5 100GbE Dual-Port NIC. This configuration made it possible to maximize hardware utilization efficiency.

Performance was tested both on bare metal and inside the VM with the same workloads.

| Environment | Run | Parameters | Result |
|---|---|---|---|
| RAW device | Maximize IOPS, all-read | 4 kB random, 100% read | 22,239,158 IOPS¹ |
| RAW device | Maximize IOPS, read/write | 4 kB random, 90% read, 10% write | 21,923,445 IOPS |
| RAW device | Maximize IOPS, read/write | 4 kB random, 70% read, 30% write | 21,906,429 IOPS |
| RAW device | Maximize throughput | 2 MB sequential, 100% read | 119.01GBps² |
| RAW device | Maximize throughput | 2 MB sequential, 100% write | 101.93GBps |
| VM-based | Maximize IOPS, all-read | 4 kB random, 100% read | 20,187,670 IOPS³ |
| VM-based | Maximize IOPS, read/write | 4 kB random, 90% read, 10% write | 19,882,005 IOPS |
| VM-based | Maximize IOPS, read/write | 4 kB random, 70% read, 30% write | 19,229,996 IOPS |
| VM-based | Maximize throughput | 2 MB sequential, 100% read | |
| VM-based | Maximize throughput | 2 MB sequential, 100% write | 102.25GBps |

¹ 84% performance out of theoretical 26,400,000 IOPS

² 106% performance out of theoretical 112.5GBps

³ 76% performance out of theoretical 26,400,000 IOPS

⁴ 105.5% performance out of theoretical 112.5GBps
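Since Stage 3 ran the same workloads on the RAW device and inside the VM, the two sets of IOPS figures can be compared directly. The sketch below only does that division; the numbers are copied from the Stage 3 table, and the dictionary keys and function name are illustrative.

```python
# Fraction of bare-metal (RAW device) IOPS retained inside the VM in Stage 3.
# IOPS values are copied from the Stage 3 table; names are illustrative.

RAW_IOPS = {
    "100% read": 22_239_158,
    "90% read / 10% write": 21_923_445,
    "70% read / 30% write": 21_906_429,
}
VM_IOPS = {
    "100% read": 20_187_670,
    "90% read / 10% write": 19_882_005,
    "70% read / 30% write": 19_229_996,
}


def retained_pct(vm_iops: int, raw_iops: int) -> float:
    """Percentage of RAW-device IOPS that the VM-based run achieved."""
    if raw_iops <= 0:
        raise ValueError("RAW-device IOPS must be positive")
    return 100.0 * vm_iops / raw_iops


retained = {mix: retained_pct(VM_IOPS[mix], RAW_IOPS[mix]) for mix in RAW_IOPS}

for mix, pct in retained.items():
    print(f"4 kB random, {mix}: VM retains {pct:.1f}% of RAW-device IOPS")
```

With these inputs, the VM-based runs keep roughly nine-tenths of the RAW-device IOPS, with the gap widening slightly as the write share grows.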

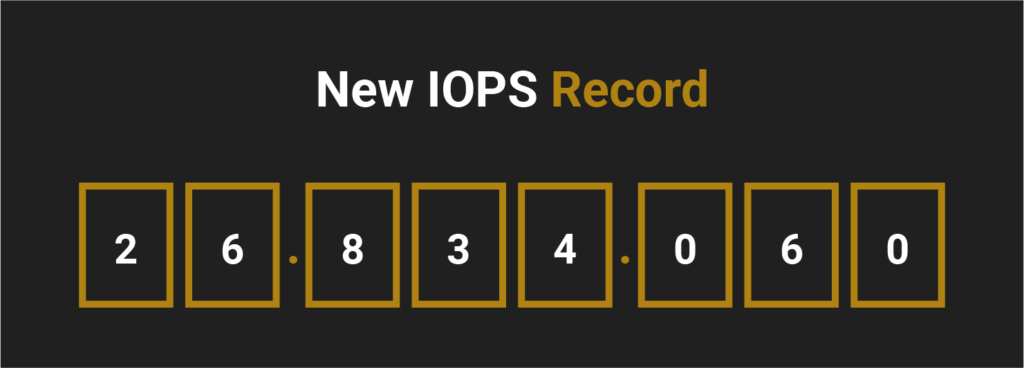

WRAP

Long story short, here are the peak IOPS figures obtained in the three tests.

| # | HCI industry’s high scores | IOPS |
|---|---|---|
| 1 | Cache-less iSCSI shared storage | 6,709,997 |
| 2 | SATA SSD iSCSI shared storage + NVMe write-back cache | 26,834,060 |
| 3 | Linux SPDK NVMe-oF Target + StarWind NVMe-oF Initiator | 22,239,158 |

Note that Microsoft reached 13.8M IOPS with their setup. This is where questions about hardware utilization efficiency arise.

Yes, I know of Huawei’s success, but I do not know that much about the setup, so I do not discuss their study in my article.

If the environments of the future use NVMe drives (which is very likely), Intel, Mellanox, StarWind and Supermicro have brought this future closer by showing how to utilize this type of flash effectively.